In robotics, advances in sensor technology have transformed the way robots perceive and interact with their surroundings. From simple obstacle detectors to cameras and lidar, these devices give robots a clearer and more comprehensive view of the world.

By enhancing vision, enabling tactile interaction, and fusing data from multiple sources, advanced robotic sensors are paving the way for unprecedented capabilities in various industries and applications.

In this article, we explore how these sensors are giving robots a better understanding of their environment and transforming the field of robotics as we know it.

Key Takeaways

- Sensors have evolved from basic to advanced capabilities, enabling robots to perceive and interpret environmental cues.

- Advanced sensors, such as cameras with image recognition algorithms and Lidar sensors, provide object recognition and accurate obstacle detection.

- Sensor fusion integrates data from different sensors, improving accuracy, reducing errors, and extending perception range and adaptability.

- Advanced robotic sensors have applications in various industries such as manufacturing, healthcare, agriculture, transportation, and space exploration.

Evolution of Robot Sensors: From Basic to Advanced

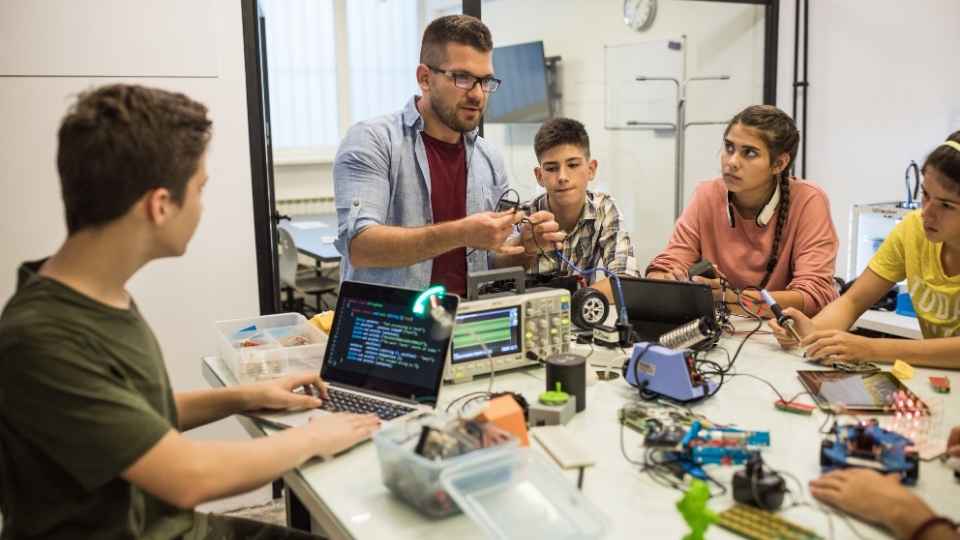

The evolution of robotic sensors has seen a significant shift from basic to advanced capabilities, allowing robots to gain a more comprehensive understanding of the world around them. In the early stages of robotics, sensors were limited to simple functions such as detecting obstacles or measuring distances. However, with advancements in technology, sensors have become more sophisticated and intelligent.

Advanced robotic sensors now possess the ability to perceive and interpret various environmental cues. For instance, cameras equipped with image recognition algorithms enable robots to recognize objects and navigate through complex environments. Lidar sensors utilize lasers to create detailed 3D maps of surroundings, enabling robots to accurately detect and avoid obstacles.

Furthermore, advancements in sensor fusion techniques have allowed robots to combine data from multiple sensors for a more accurate perception of their environment. This integration of information enables robots to make informed decisions in real-time situations.

Enhancing Perception: How Advanced Sensors Improve Robot Vision

Cutting-edge sensor technology significantly improves a robot's visual capabilities. Advanced sensors play a crucial role in enabling robots to better understand and interact with their environment.

Here are five key ways in which these advanced sensors enhance perception:

- Higher-resolution cameras provide sharper and more detailed images, allowing robots to distinguish fine details and recognize objects more accurately.

- Depth sensors enable robots to perceive the distance between objects, helping them navigate complex environments and avoid obstacles.

- Infrared sensors detect heat signatures, allowing robots to identify living beings or sources of heat even in low-light conditions.

- Lidar sensors use laser beams to create detailed 3D maps of the surroundings, giving robots a holistic view of their environment for improved navigation and object recognition.

- Force/torque sensors provide feedback on physical interactions, letting robots sense how much force they are applying and adjust their movements accordingly.
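As a rough illustration of the depth-sensor point above, the sketch below scans a small depth frame for the nearest valid reading and decides whether the robot should stop. The function names, the 0.5 m stop distance, and the convention that `0.0` marks a pixel with no depth return are all assumptions made for this example, not part of any particular sensor's API.

```python
from typing import Optional, Sequence


def nearest_obstacle(depth_m: Sequence[Sequence[float]],
                     max_range_m: float = 10.0) -> Optional[float]:
    """Return the smallest valid depth reading in metres, or None if none exist."""
    # Readings of 0.0 (assumed "no return") or beyond max_range_m are ignored.
    valid = [d for row in depth_m for d in row if 0.0 < d <= max_range_m]
    return min(valid) if valid else None


def should_stop(depth_m: Sequence[Sequence[float]],
                stop_distance_m: float = 0.5) -> bool:
    """True when the nearest valid reading is closer than the stop distance."""
    nearest = nearest_obstacle(depth_m)
    return nearest is not None and nearest < stop_distance_m


# A tiny 2x3 "depth image" in metres (hypothetical values).
frame = [
    [2.0, 1.8, 0.0],
    [1.5, 0.4, 2.2],
]
print(nearest_obstacle(frame))
print(should_stop(frame))
```

A real system would usually filter noise and look only at the region in the robot's path, but the core idea is the same: turn per-pixel distances into a simple navigation decision.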

Tactile Sensors: Enabling Robots to Feel and Interact With the World

Tactile sensors are essential components that allow robots to perceive and interact with their surroundings through touch. These sensors provide robots with the ability to sense pressure, vibration, and temperature, enabling them to navigate their environment safely and effectively. By incorporating tactile sensors into their design, robots can perform tasks that require delicate manipulation or interaction with objects of varying textures.

Tactile sensors work by converting physical stimuli into electrical signals that can be interpreted by the robot's control system. They typically consist of arrays of small sensing elements that detect mechanical forces and transmit this information for processing. The robot can then adjust its movements based on this feedback, ensuring accurate and precise interactions.
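To make the idea of an array of sensing elements more concrete, here is a minimal sketch of how a control system might summarize one frame of tactile data. It assumes the sensor has already been read into a grid of pressure values in arbitrary units; `contact_summary` and the noise `threshold` are hypothetical names chosen for this example.

```python
from typing import List, Optional, Tuple


def contact_summary(
    pressure: List[List[float]], threshold: float = 0.05
) -> Tuple[float, Optional[Tuple[float, float]]]:
    """Return (total pressure, centre of pressure as (row, col)) for one frame.

    Elements reading below `threshold` are treated as noise and ignored.
    The centre is None when nothing is touching the pad.
    """
    total = 0.0
    row_moment = 0.0
    col_moment = 0.0
    for r, row in enumerate(pressure):
        for c, p in enumerate(row):
            if p < threshold:
                continue
            total += p
            row_moment += r * p
            col_moment += c * p
    if total == 0.0:
        return 0.0, None
    # Pressure-weighted average position of the contact.
    return total, (row_moment / total, col_moment / total)


# A 3x3 pad with contact on two neighbouring elements (hypothetical values).
pad = [
    [0.0, 0.0, 0.0],
    [0.0, 1.0, 1.0],
    [0.0, 0.0, 0.0],
]
total, centre = contact_summary(pad)
print(total, centre)
```

A gripper controller could use the total to regulate grip force and watch the centre of pressure for drift, which may indicate that an object is slipping.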

In addition to providing valuable information about the robot's immediate surroundings, tactile sensors also play a crucial role in sensor fusion: combining multiple types of sensory inputs for a comprehensive view of the world. By integrating data from tactile sensors with other sensor modalities such as vision or proprioception, robots can have a more complete understanding of their environment, enhancing their autonomy and adaptability in various tasks.

Sensor Fusion: Combining Multiple Sensors for a Comprehensive View

Sensor fusion involves integrating data from different sensory inputs, enabling robots to have a more comprehensive understanding of their surroundings. This advanced technique combines the information gathered from various sensors, such as cameras, lidar, radar, and inertial measurement units (IMUs), to create a unified perception of the environment. By fusing these sensor outputs together, robots can overcome limitations inherent in individual sensors and obtain a more accurate and reliable representation of the world around them.

Benefits of Sensor Fusion:

- Improved accuracy: Combining data from multiple sensors enhances precision and reduces errors.

- Redundancy: Having redundant sensory inputs allows for better fault tolerance and robustness.

- Increased perception range: Different sensors capture different aspects of the environment, extending the robot's perceptual capabilities.

- Adaptability: Sensor fusion enables robots to adapt to changing environments by dynamically adjusting sensor weights or using alternative sensors when needed.

- Environmental understanding: Integrating diverse sensor modalities provides a more holistic view of complex scenarios.

With sensor fusion techniques continually evolving, robots are becoming increasingly capable of perceiving their surroundings with greater clarity and accuracy. This advancement opens up new possibilities for autonomous navigation, object recognition, human-robot interaction, and many other application domains.
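One simple way to see how fusion can improve accuracy is inverse-variance weighting, sketched below. This is only one of many fusion techniques and is not necessarily what any given robot uses; it assumes each sensor reports an independent, unbiased estimate of the same quantity together with a known noise variance. The sensor values and variances are made up for illustration.

```python
from typing import List, Tuple


def fuse(estimates: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Fuse (value, variance) pairs by inverse-variance weighting.

    Returns the fused value and its variance. Less noisy sensors get
    larger weights, and the fused variance is smaller than any input's.
    """
    weights = [1.0 / variance for _, variance in estimates]
    total_weight = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total_weight
    return value, 1.0 / total_weight


# Hypothetical range readings to the same obstacle, in metres.
lidar = (2.00, 0.01)   # precise sensor: small variance
radar = (2.20, 0.04)   # noisier sensor: larger variance

distance, variance = fuse([lidar, radar])
print(f"fused distance: {distance:.2f} m, variance: {variance:.3f}")
```

The fused estimate sits closer to the more precise sensor, and adjusting the variances at run time (for example, trusting a camera less in the dark) is one way to realize the dynamic sensor weighting mentioned in the list above.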

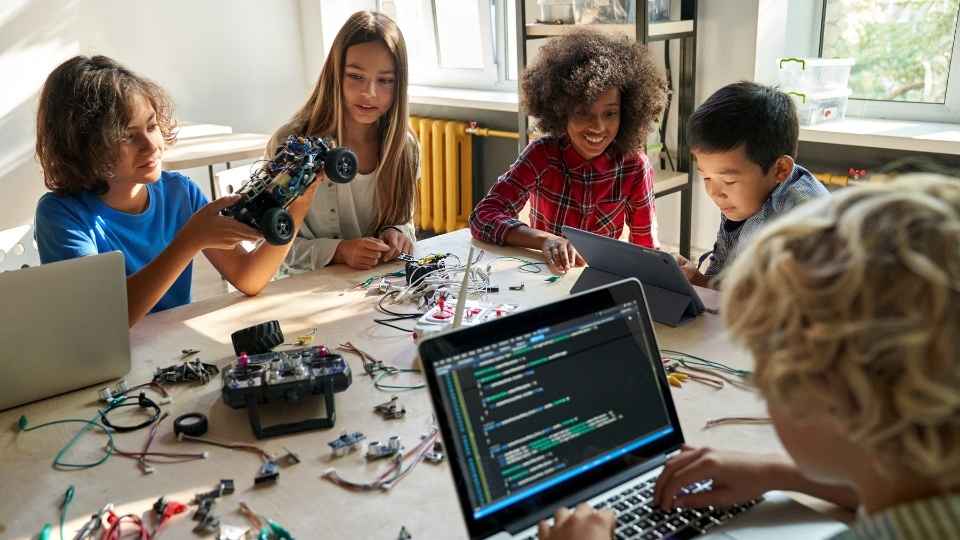

Applications of Advanced Robot Sensors in Real-World Scenarios

In real-world scenarios, cutting-edge robotic perception technologies allow for a more comprehensive understanding and accurate interpretation of the surrounding environment. Advanced robot sensors play a crucial role in enabling robots to interact with their surroundings effectively. These sensors, such as lidar (Light Detection and Ranging), cameras, and depth sensors, provide valuable information about the physical world. For instance, lidar sensors emit laser pulses and measure the time each pulse takes to return after bouncing off an object in its path. This data is then used to create detailed 3D maps of the environment. Cameras capture visual information that can be processed through computer vision algorithms to identify objects, recognize faces or gestures, and navigate complex scenes. Depth sensors help robots perceive distance accurately and avoid collisions with obstacles.
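The time-of-flight principle behind lidar can be sketched in a few lines. Because the light travels to the object and back, the distance is half the round-trip time multiplied by the speed of light. The second function shows one common way to turn a range plus beam angles into a 3D point; the function names and the axis convention (x forward, z up) are assumptions for this example.

```python
import math
from typing import Tuple

SPEED_OF_LIGHT_M_S = 299_792_458.0


def tof_distance_m(round_trip_s: float) -> float:
    """Distance to the target from a round-trip time, in metres."""
    # Divide by 2 because the pulse covers the distance twice.
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def to_point(range_m: float, azimuth_rad: float,
             elevation_rad: float) -> Tuple[float, float, float]:
    """Convert a range and beam angles to an (x, y, z) point in the sensor frame."""
    horizontal = range_m * math.cos(elevation_rad)
    return (
        horizontal * math.cos(azimuth_rad),
        horizontal * math.sin(azimuth_rad),
        range_m * math.sin(elevation_rad),
    )


# A pulse that returns after about 66.7 nanoseconds hit something roughly 10 m away.
distance = tof_distance_m(66.7e-9)
print(f"{distance:.2f} m")
print(to_point(distance, azimuth_rad=0.0, elevation_rad=0.0))
```

Repeating this for every pulse in a sweep yields the point cloud from which a 3D map is built.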

With these advanced sensors at their disposal, robots can perform tasks autonomously in various domains like manufacturing, healthcare, agriculture, transportation, and even space exploration. In manufacturing settings, robots equipped with advanced vision systems can detect defects on products or perform intricate assembly tasks with precision. In healthcare applications, robot nurses can use cameras to monitor patients' vital signs or assist doctors during surgery by providing real-time imaging feedback.

Moreover, agricultural robots leverage advanced sensing technologies to assess crop health through multispectral imaging or automate labor-intensive tasks like planting seeds or harvesting. Self-driving cars rely heavily on sensor data from lidar units and cameras to perceive road conditions accurately and make informed decisions while navigating through traffic.

Advances in robotic perception technologies have opened up new possibilities for automation while supporting safety and efficiency across industries. As these technologies continue to evolve rapidly, we can expect even greater strides in robotics capabilities in the near future, with intelligent machines working alongside humans in everyday life.

Frequently Asked Questions

How Have Robotic Sensors Evolved From Basic to Advanced?

Robotic sensors have evolved from basic to advanced through continuous technological advancements. These advancements include improvements in sensing capabilities, such as increased accuracy, higher resolution, enhanced perception of the environment, and integration with other intelligent systems for better data analysis and decision-making.

What Are the Specific Ways in Which Advanced Sensors Improve Robot Vision?

Advanced sensors enhance robot vision through improved resolution, accuracy, and range. They enable robots to detect and recognize objects more effectively, navigate complex environments with precision, and adapt to changing conditions in real-time.

How Do Tactile Sensors Enable Robots to Feel and Interact With the World?

Tactile sensors enable robots to feel and interact with the world by providing them with the ability to detect and measure physical forces, pressure, temperature, and texture. This allows for enhanced dexterity, object manipulation, and safe human-robot interaction.

What Is Sensor Fusion and How Does It Combine Multiple Sensors for a Comprehensive View?

Sensor fusion is the process of combining data from multiple sensors to obtain a more comprehensive view. By integrating inputs such as vision, lidar, and infrared sensors, robots can gather rich information for enhanced perception and decision-making in diverse environments.

Can You Provide Examples of Real-World Scenarios Where Advanced Robot Sensors Are Applied?

Advanced robot sensors are applied in various real-world scenarios, from autonomous vehicles navigating complex environments to industrial robots performing precise tasks. These sensors provide accurate and detailed information to enhance the robots' perception and decision-making capabilities.

